Not “Co” Anymore For Me!

June 2017

One of the earliest posts I wrote on this blog was back in the month of June, in 2017. It was a post titled “Complements and Substitutes”.

A simple post about a basic concept in economics, and that is pretty much all there is to that post. But I was reminded of that post this morning, and I am going to spend the rest of today’s post explaining why I was reminded of it, and why my being reminded of it should worry you. A lot.

We’ll get there. For now, here’s one sentence from my post about complements and substitutes that I’d like you to keep in mind:

When the price of a close substitute goes up, the demand for the good in question rises, and vice versa.

Just in case you’re confused about what this means in practice, here’s a simple way to think about it: if Coke doubles in price, what do you think will happen to the demand for Pepsi?
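If you like seeing this in numbers, economists summarize it with the cross-price elasticity of demand: the percentage change in the quantity demanded of one good, divided by the percentage change in the price of another. A positive value signals substitutes, a negative value signals complements. Here is a minimal Python sketch. The function names and the Coke/Pepsi figures are made up purely for illustration; they are not real market data.

```python
def cross_price_elasticity(pct_change_qty_a: float, pct_change_price_b: float) -> float:
    """Percent change in demand for good A per percent change in the price of good B."""
    if pct_change_price_b == 0:
        raise ValueError("the price of good B must actually change")
    return pct_change_qty_a / pct_change_price_b


def classify(elasticity: float) -> str:
    """Positive: substitutes. Negative: complements. Zero: unrelated goods."""
    if elasticity > 0:
        return "substitutes"
    if elasticity < 0:
        return "complements"
    return "unrelated"


# Hypothetical scenario: Coke doubles in price (+100%), Pepsi demand rises by 30%.
price_up = cross_price_elasticity(pct_change_qty_a=30.0, pct_change_price_b=100.0)

# The "vice versa" case: Coke gets 50% cheaper, Pepsi demand falls by 15%.
price_down = cross_price_elasticity(pct_change_qty_a=-15.0, pct_change_price_b=-50.0)

print(classify(price_up), classify(price_down))
```

Both scenarios come out with a positive elasticity, which is the point of the "vice versa": the sign describes the relationship between the two goods, whichever way the price moves.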

Makes sense? Great, hold on to that thought, and come along with me on a ride.

April 2024

In April 2024, I wrote up a review of a book called Co-Intelligence, by Ethan Mollick. Ethan is a professor at Wharton, an AI researcher, and a guy you absolutely must follow online to keep abreast of where AI is going.

In that post, I had Claude Opus write me an explanation of the Lovelace Test. I asked it to tell me about the Lovelace Test in a way that ensured it would pass the Lovelace Test. It did it, of course, and it didn’t do a bad job, exactly… but in September 2025, we can only look back at LLM outputs from back then with something approaching pity.

Or something approaching nostalgia, maybe, because my post from April 2024 contained a description of Ethan’s four rules for working with AI:

Always invite AI to the table

Be the human in the loop

Treat AI like a person

Assume this is the worst AI you will ever use

One of the reasons I’m writing today’s post is that it turns out that following the fourth and the first rules makes it really difficult to follow the second one.

April 2024-September 2025

What has changed between my post about Ethan’s book and today?

Today, I can upload my blog post from back then into NotebookLM. In no time, I can generate a video overview, an audio podcast, a mind-map, an FAQ, a briefing doc and flashcards. All of this in multiple languages, of course. I can specify the type of podcast I want (do I want a debate, or a critique?), its length, and something that the podcast should really drill down upon. Does that get one a passing grade on the Lovelace Test?

Ethan Mollick himself is famous for his many different Lovelace tests. His otter series is legendary. He’s got an entire blogpost about his otter-worldly journey (sorry), but I’ll just pick three from the series to give you an idea of how far we’ve come.

Here’s the very first one, from 2021 (this is the pre-GPT era):

Here’s Nov 2022:

And here’s what we could do in June 2025:

June 2025 is ancient history, of course. We now have nano-Banana, Google’s latest image editing tool. If you haven’t tried it yet, I urge you to give it a go.

Drop the Co

The title of today’s post may make you think that the inspiration comes from Ethan reflecting on and updating his take on his own book. And you’d be partially right, because Ethan has written a blogpost that we are going to talk about.

But the title? That’s a direct excerpt from a tweet today by Derya Unutmaz:

I’ve been beta-testing @GoogleDeepMind’s AI Co-Scientist for quite some time, and it’s so incredible I can’t stop raving about it to my colleagues! In fact, I told Vivek and the rest of the amazing team who built it that I now call it the AI SCIENTIST, not “Co” anymore for me! https://t.co/0HeNHkFLjI

— Derya Unutmaz, MD (@DeryaTR_) September 11, 2025

And in a weird coincidence, as I mentioned, Ethan Mollick wrote a post saying much the same thing, but at much greater length:

In my book, Co-Intelligence, I outlined a way that people could work with AI, which was, rather unsurprisingly, as a co-intelligence. Teamed with a chatbot, humans could use AI as a sort of intern or co-worker, correcting its errors, checking its work, co-developing ideas, and guiding it in the right direction. Over the past few weeks, I have come to believe that co-intelligence is still important but that the nature of AI is starting to point in a different direction. We’re moving from partners to audience, from collaboration to conjuring.

As I messaged a friend this morning after I read the piece, Ethan is really saying that AI is fast moving from being a complement to our work, to being a substitute for it. And not just “a” substitute. A much better substitute:

Right now, no AI model feels more like a wizard than GPT-5 Pro, which is only accessible to paying users. GPT-5 Pro is capable of some frankly amazing feats. For example, I gave it an academic paper to read with the instructions “critique the methods of this paper, figure out better methods and apply them.” This was not just any paper, it was my job market paper, which means my first major work as an academic. It took me over a year to write and was read carefully by many of the brightest people in my field before finally being peer reviewed and published in a major journal.

Nine minutes and forty seconds later, I had a very detailed critique. This wasn’t just editorial criticism, GPT-5 Pro apparently ran its own experiments using code to verify my results, including doing Monte Carlo analysis and re-interpreting the fixed effects in my statistical models. It had many suggestions as a result (though it fortunately concluded that “the headline claim [of my paper] survives scrutiny”), but one stood out. It found a small error, previously unnoticed. The error involved two different sets of numbers in two tables that were linked in ways I did not explicitly spell out in my paper. The AI found the minor error, no one ever had before.

Now would be a good time to re-remember that sentence I asked you to hold on to. Here it is again, for your reading pleasure:

When the price of a close substitute goes up, the demand for the good in question rises, and vice versa.

Here are two things for you to chew on.

First thing: does this statement work in reverse as well? If the price of a close substitute goes down, what happens to the demand for the good in question?

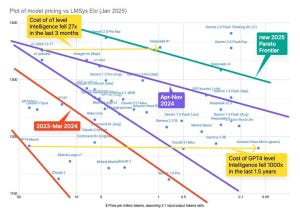

Second thing: what is this chart telling you?

Is the price of intelligence going up, or down?

Again, think back to complements and substitutes. As the price of intelligence continues to drop, some jobs that we do today are going to be outsourced to AI. Others, where the price/quality differential is not so favorable (to the AI), will continue to be allocated to humans. But if there is one thing that you should take as a given about this, it is the dynamic nature of the argument. Things will change very, very quickly!

Please do spend some time thinking about your answer to these three questions:

Is AI a complement or a substitute?

Is it getting cheaper or more expensive over time?

What does this mean for us?

Skate To Where The Puck Is Going To Be

Now that you have thought about those questions, think about a chart and a tweet:

Please note that the vertical axis is a log scale. In English: each unit move upwards is a 10x improvement, each unit move rightwards is a 1 year increment.

Still in English: model capabilities have gone up 10x every year, for the last five years. Now sure, you can nitpick the details of the chart. But hopefully we’re in agreement about the broad direction of the trend. And speaking of trend…

What do you think model capabilities will look like in the next five years?
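If it helps to see the compounding spelled out, here is a small Python sketch. The 10x-per-year rate is simply my reading of the chart as described above. Carrying the same rate forward another five years is purely an assumption for the sake of illustration, not a forecast, and the function name is made up.

```python
def cumulative_gain(factor_per_year: float, years: int) -> float:
    """Total multiple after compounding the same yearly factor for `years` years."""
    return factor_per_year ** years


# One step up on a log-scale axis per year means multiplying by 10 each year.
for years in range(1, 6):
    print(f"after {years} year(s): {cumulative_gain(10, years):,}x")

# Assumption only: the same rate holding for five more years (ten in total).
ten_year_multiple = cumulative_gain(10, 10)
print(f"after 10 years at the same rate: {ten_year_multiple:,}x")
```

The thing to notice is that on a log scale a straight line means multiplication, not addition: five equal steps up are not "five times better" but five successive tenfold jumps.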

I think a lot of gap between people who “get” LLMs and people who don’t is that some people understand current capabilities to be a floor and some people understand them to be either a ceiling or close enough to a ceiling.

— Patrick McKenzie (@patio11) September 2, 2025

So: Should you be worried?

That is your call. For the record, I am.

But you should certainly be informed, and that is what this post was about.